The Challenge

Augmented Reality (AR) is a hot trend spreading quickly through industries like social media, navigation, and optometry. While many companies sell glasses and sunglasses, few have explored AR technology to streamline the process of buying glasses. As part of my DesignLab capstone project, I created Sunglaass, a fictional glasses retailer with an AR app that shows how glasses fit on a person's face.

Duration

5.5 weeks

Tools

Sketch, InVision, Maze.design, Zeplin

My Process

I focused on the UX and UI of this personal design challenge, handling the research, flows, brand, and testing for the app. Below are the deliverables I produced.

Discover

Research

Interviews & Surveys

Card Sorting

Define

Product Goals & Roadmap

Sitemap

User & Task Flows

Design

Brand & Style Guide

Wireframes

UI Design & Prototype

Deliver

Testing

Iterations

Accessibility Check

Discover

How do users prefer to purchase glasses?

To find out, I did a competitor analysis and industry research to understand how other companies sell glasses. I found that one third of purchasers are 18-34, while almost half are over 55. Many shop at retail stores or through optometrists, but rarely online. I wanted to explore why, since (1) the number of online shoppers has steadily increased in the past few years, and (2) mobile augmented reality could create a faster and cheaper shopping experience. I created provisional personas reflecting the demographics in the research to decide which user groups to interview.

I conducted 6 in-person or phone interviews and issued a survey that received 32 responses from individuals in my social network.

Interview Quotes

Survey & Interview Themes

Across all age and gender groups, most users prefer to shop in stores. They shop for sunglasses at a variety of places.

When shopping, interviewees said they would like a second opinion when selecting glasses.

Most users said they always consider style and color when choosing glasses, more so than price.

Most users shopped at a location because it was convenient.

✳ Two main user groups emerged: users who have particular taste and want to shop in person, and users who shop somewhere because it is convenient.

Define

The survey showed me that most users shop for glasses in stores out of convenience (e.g., when they are already at a shopping mall) or during an optometrist visit. They also seek affirmation from other people about whether certain glasses look good on them. Since there were two common scenarios (those who purchase at the optometrist, and those who buy only when they are out shopping), I created two personas to embody their needs:

Charlotte, the particular shopper who prefers to shop in person, and Damien, the shopper seeking convenience.

Their goals, needs, and opinions are based on real user feedback and captured in the Empathy Maps below.

Personas


Empathy Maps


User Flows


How Might We Statements

How might we allow users to find the perfect pair of glasses more easily?

I brainstormed how might we (HMW) statements to consider multiple ways to solve the personas' problems when shopping for glasses. Then I curated the resulting feature ideas into a product roadmap for an iOS app that would solve the shopping problem.

✳ By exploring the motivations behind these user groups, I found that I needed one solution to solve two problems:

For particular shoppers, I needed to find a solution that would allow them to try on glasses to make sure they fit and look good.

For convenience shoppers, I needed to find a convenient and affordable way to shop.

Design

Now that I had defined the problem (shopping for glasses), it was time to work out how the app would be organized (information architecture) and how it would look (interface design).

I began by conducting a closed card sort, in which users placed terms into predefined categories. This helped me confirm how users would organize information such as frame color and material.

Card sort results


I also created a sitemap, user flows, and task flows to map out a possible order of screens in the app.

App Sitemap


Below are some of my sketches and medium-fidelity wireframes. I tried a couple of different layouts for the catalog page, since it is the page users are likely to return to most often.

Wireframes and Sketches


Afterwards, I designed the branding for Sunglaass, the company building the app, along with a user interface kit consistent with the brand. I wanted the design to stand out and appeal to the younger users I identified during my research.

As a final check, I referred to Apple's Human Interface Guidelines to make sure the designs accounted for users with visual impairments. I made sure that my style guide and assets followed the recommended sizes and kept layouts consistent.

The style guide can be found below and on this Zeplin page.

Deliver

I used remote testing on Maze.design to gather feedback on how users would navigate the app. I chose remote testing because I wanted to reach a larger number of testers in a short amount of time.

I went through two testing cycles, reviewing the heatmaps, misclick rate, bounce rate, and user comments on the prototype to improve my mockup screens.

The first round received 10 responses. Overall, the face measuring and add-to-cart features proved the most challenging for users. The onboarding process misled users the most, perhaps because several areas on the screen used the same colors as the buttons.

Test Results - Iteration 1

I also noticed that, although the prototype was for an iOS app, most users had trouble with the swiping gestures, possibly because they tested it on their computers. As a result, I removed the swiping features and any hotspots that could cause users to misclick on the way to where they intended to go.

Iteration 1 Results


Test Results - Iteration 2

I tested my hypotheses in a second iteration, revising and expanding several screens, and received 18 responses. While the results improved, some users were still clicking around the screen during the onboarding process.

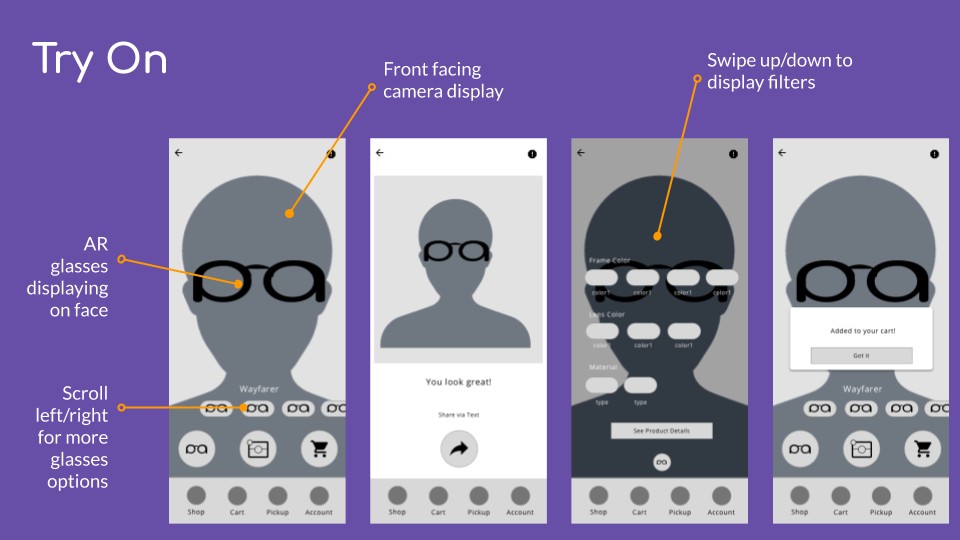

User Interface & Prototype


Lessons Learned & Next Steps

Developing an iOS app was a unique challenge, especially one built around AR! Even so, designing visuals for a mobile device was the highlight of this project.

From Sunglaass, my main takeaways for future improvements are:

Designing with less real estate

Some testers reported that they got lost and encountered buggy hotspots in my prototype. This might have been because some buttons were placed too close together. Because I was working with a smaller screen, deciding which items to include and where to place them was more challenging. Compared to my previous projects, I also had to build many more screens and flows.

The challenges of onboarding

Creating an onboarding flow was both fun and challenging. There was a fine line between giving users too much information and not enough. Throughout the flow, the heatmaps showed that users must be guided very carefully. In my onboarding, I suspect that I highlighted too many buttons and items on the screen, which contributed to more misclicks. In future designs, I will consider simplifying my onboarding flow to make it more streamlined.